Introduction

The OCI GenAI service offers access to pre-trained models and also lets you host your own custom models.

The following out-of-the-box (OOTB) models are available:

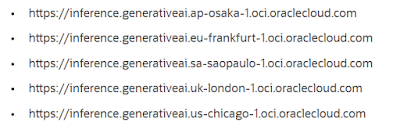

The OCI GenAI service is currently available in 5 regions. I will be using the Chicago (us-chicago-1) endpoint in this post.

The objective of this post is to show how easy it is to invoke the GenAI chat interface from an OIC integration, using both Cohere and Llama models.

Prerequisites

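First, generate a private key and derive the matching public key from it:

```shell
# 2048-bit RSA private key
openssl genrsa -out oci_api_key.pem 2048
# Public key in PEM format, to be uploaded to OCI
openssl rsa -pubout -in oci_api_key.pem -out oci_api_key_public.pem
```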
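The console shows a fingerprint once the public key is uploaded, and that fingerprint is needed later. As a cross-check, you can compute it locally. The sketch below assumes OCI's fingerprint is the MD5 of the DER-encoded public key, printed as colon-separated hex. That is the value `openssl rsa -pubout -outform DER -in oci_api_key.pem | openssl md5 -c` produces. The function name is hypothetical, and only the Python standard library is used.

```python
import base64
import hashlib


def oci_key_fingerprint(public_pem: str) -> str:
    """Return the colon-separated MD5 fingerprint of a PEM public key.

    The PEM body is base64-encoded DER (SubjectPublicKeyInfo), so hashing the
    decoded bytes should match
    `openssl rsa -pubout -outform DER -in oci_api_key.pem | openssl md5 -c`.
    """
    lines = [ln.strip() for ln in public_pem.splitlines()]
    # Drop the BEGIN/END armour lines and join the base64 body.
    body = "".join(ln for ln in lines if ln and not ln.startswith("-----"))
    digest = hashlib.md5(base64.b64decode(body)).hexdigest()
    # Format as byte pairs: "ab:cd:ef:..."
    return ":".join(digest[i:i + 2] for i in range(0, len(digest), 2))
```

For example, `oci_key_fingerprint(open("oci_api_key_public.pem").read())` should print the same string the console displays for the uploaded key.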

I then log in to the OCI Console and create a new API key for my user, under User Settings > API Keys.

I choose and upload the public key I just created, then copy the fingerprint for later use. I also created the following policy:

```text
allow group myAIGroup to manage generative-ai-family in tenancy
```
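With the key pair, fingerprint and policy in place, OCI can authenticate calls to the GenAI endpoint. Each request is signed with the API key using OCI request signing, which follows the draft-cavage HTTP Signatures scheme:

- the tenancy OCID, user OCID and fingerprint form the `keyId`;
- the private key signs a string built from selected request headers.

I am assuming the OIC connection uses the "OCI Signature Version 1" security policy, in which case OIC handles all of this for you. The Python sketch below only illustrates what the key and fingerprint are used for:

- The function names and the OCIDs in the usage are hypothetical placeholders.
- The RSA-SHA256 signing step itself is omitted, because it needs a crypto library (for example `cryptography`) rather than the standard library.

```python
import base64
import hashlib
from email.utils import formatdate
from typing import Optional
from urllib.parse import urlsplit

# Headers signed for a POST with a body, in the order they appear in the
# signing string (as described in OCI's request-signing documentation).
SIGNED_HEADERS = ["(request-target)", "date", "host",
                  "x-content-sha256", "content-type", "content-length"]


def headers_to_sign(url: str, body: bytes, date: Optional[str] = None) -> dict:
    """Compute the values of the headers that get signed for a JSON POST."""
    parts = urlsplit(url)
    target = parts.path + ("?" + parts.query if parts.query else "")
    return {
        "(request-target)": "post " + target,  # lowercase method + path
        "date": date or formatdate(usegmt=True),  # RFC 1123 date in GMT
        "host": parts.netloc,
        "x-content-sha256": base64.b64encode(hashlib.sha256(body).digest()).decode(),
        "content-type": "application/json",
        "content-length": str(len(body)),
    }


def signing_string(headers: dict) -> str:
    """Join the headers as 'name: value' lines in SIGNED_HEADERS order."""
    return "\n".join(f"{name}: {headers[name]}" for name in SIGNED_HEADERS)


def authorization_header(tenancy_ocid: str, user_ocid: str,
                         fingerprint: str, signature_b64: str) -> str:
    """Assemble the Authorization header value.

    signature_b64 is the base64 RSA-SHA256 signature of signing_string(),
    made with oci_api_key.pem; computing it needs an RSA library and is
    not shown here.
    """
    key_id = f"{tenancy_ocid}/{user_ocid}/{fingerprint}"
    header_list = " ".join(SIGNED_HEADERS)
    return (f'Signature version="1",keyId="{key_id}",algorithm="rsa-sha256",'
            f'headers="{header_list}",signature="{signature_b64}"')
```

The body hash (`x-content-sha256`) and `content-length` are signed as well. This means the JSON payload cannot be altered in transit without invalidating the signature.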

Invoking GenAI chat interface from OIC

Next, in OIC, I create a new project and add two connections.

The OCI GenAI connection is configured as follows; note the base URL: https://inference.generativeai.us-chicago-1.oci.oraclecloud.com

As mentioned above, OCI GenAI is currently available in 5 regions; check the documentation for the endpoints of the other regions.
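The Chicago base URL embeds the region identifier, so switching regions should mostly mean changing that one segment. The sketch below makes two assumptions:

- the other regional endpoints follow the same host pattern;
- the chat operation is served at the versioned path `/20231130/actions/chat`, which is my reading of the Generative AI Inference API reference.

Confirm both against the documentation for your region. The helper name is hypothetical.

```python
# Assumption: the chat operation lives at this versioned path on every
# regional inference host.
CHAT_PATH = "/20231130/actions/chat"


def chat_endpoint(region: str) -> str:
    """Build the chat URL for a region identifier such as 'us-chicago-1'."""
    # Assumption: all regions share the Chicago host pattern.
    host = f"inference.generativeai.{region}.oci.oraclecloud.com"
    return f"https://{host}{CHAT_PATH}"
```

For example, `chat_endpoint("us-chicago-1")` yields the Chicago base URL from the connection above, with the chat path appended.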

This integration uses the Cohere LLM. The invoke is configured with the following request payload:

```json
{
  "compartmentId" : "ocid1.compartment.yourOCID",
  "servingMode" : {
    "modelId" : "cohere.command-r-08-2024",
    "servingType" : "ON_DEMAND"
  },
  "chatRequest" : {
    "message" : "Summarize: Hi there my name is Niall and I am from Dublin",
    "maxTokens" : 600,
    "apiFormat" : "COHERE",
    "frequencyPenalty" : 1.0,
    "presencePenalty" : 0,
    "temperature" : 0.2,
    "topP" : 0,
    "topK" : 1
  }
}
```
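The same request body can also be built in code. This is handy for keeping a sample payload around when defining the invoke, or for calling the endpoint outside OIC. Below is a minimal Python sketch; the function name and its defaults are my own hypothetical choices, and they simply mirror the values in the payload above.

```python
import json


def cohere_chat_request(compartment_id: str, message: str,
                        model_id: str = "cohere.command-r-08-2024",
                        max_tokens: int = 600) -> dict:
    """Build a COHERE-format chat request body matching the payload above."""
    return {
        "compartmentId": compartment_id,
        "servingMode": {"modelId": model_id, "servingType": "ON_DEMAND"},
        "chatRequest": {
            "apiFormat": "COHERE",
            "message": message,  # COHERE format: a single prompt string
            "maxTokens": max_tokens,
            "frequencyPenalty": 1.0,
            "presencePenalty": 0,
            "temperature": 0.2,  # low temperature: less random output
            "topP": 0,
            "topK": 1,
        },
    }


body = json.dumps(cohere_chat_request(
    "ocid1.compartment.yourOCID",  # placeholder compartment, as in the post
    "Summarize: Hi there my name is Niall and I am from Dublin",
), indent=2)
print(body)
```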

The response payload:

```json
{
  "modelId" : "cohere.command-r-08-2024",
  "modelVersion" : "1.7",
  "chatResponse" : {
    "apiFormat" : "COHERE",
    "text" : "Niall, a resident of Dublin, introduces themselves.",
    "chatHistory" : [ {
      "role" : "USER",
      "message" : "Summarize: Hi there my name is Niall and I am from Dublin"
    }, {
      "role" : "CHATBOT",
      "message" : "Niall, a resident of Dublin, introduces themselves."
    } ],
    "finishReason" : "COMPLETE"
  }
}
```
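In the COHERE format, the answer sits directly in `chatResponse.text`; `chatHistory` repeats the exchange. OIC maps this for you, but the following Python sketch shows how a client outside OIC might pull out the answer:

- the sample is the response above;
- the function name is hypothetical;
- treating any `finishReason` other than `COMPLETE` as an error is my own assumption.

```python
import json

# The Cohere response from above.
COHERE_SAMPLE = json.loads("""
{
  "modelId": "cohere.command-r-08-2024",
  "modelVersion": "1.7",
  "chatResponse": {
    "apiFormat": "COHERE",
    "text": "Niall, a resident of Dublin, introduces themselves.",
    "chatHistory": [
      {"role": "USER", "message": "Summarize: Hi there my name is Niall and I am from Dublin"},
      {"role": "CHATBOT", "message": "Niall, a resident of Dublin, introduces themselves."}
    ],
    "finishReason": "COMPLETE"
  }
}
""")


def cohere_reply(response: dict) -> str:
    """Return the model's answer from a COHERE-format chat response."""
    chat = response["chatResponse"]
    # Assumption: anything other than COMPLETE is treated as a failure.
    if chat.get("finishReason") != "COMPLETE":
        raise ValueError(f"chat did not complete: {chat.get('finishReason')}")
    return chat["text"]


print(cohere_reply(COHERE_SAMPLE))  # Niall, a resident of Dublin, introduces themselves.
```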

I then test the integration.

Next, the same approach with Llama: the invoke is configured with the following request and response.

Request:

```json
{
  "compartmentId" : "ocid1.compartment.yourOCID",
  "servingMode" : {
    "modelId" : "meta.llama-3-70b-instruct",
    "servingType" : "ON_DEMAND"
  },
  "chatRequest" : {
    "messages" : [ {
      "role" : "USER",
      "content" : [ {
        "type" : "TEXT",
        "text" : "who are you"
      } ]
    } ],
    "apiFormat" : "GENERIC",
    "maxTokens" : 600,
    "isStream" : false,
    "numGenerations" : 1,
    "frequencyPenalty" : 0,
    "presencePenalty" : 0,
    "temperature" : 1,
    "topP" : 1.0,
    "topK" : 1
  }
}
```
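The main difference from the Cohere request is the shape of the prompt. With `apiFormat` set to `GENERIC`, the request takes a list of `messages` instead of a single `message` string. Each message has a role and a list of typed content parts. Below is a Python sketch of the same body; the function name and defaults are hypothetical and mirror the values above.

```python
import json


def generic_chat_request(compartment_id: str, prompt: str,
                         model_id: str = "meta.llama-3-70b-instruct",
                         max_tokens: int = 600) -> dict:
    """Build a GENERIC-format chat request body matching the payload above."""
    user_message = {
        "role": "USER",
        # GENERIC format: content is a list of typed parts, here one TEXT part
        "content": [{"type": "TEXT", "text": prompt}],
    }
    return {
        "compartmentId": compartment_id,
        "servingMode": {"modelId": model_id, "servingType": "ON_DEMAND"},
        "chatRequest": {
            "apiFormat": "GENERIC",
            "messages": [user_message],
            "maxTokens": max_tokens,
            "isStream": False,  # serialised as JSON false
            "numGenerations": 1,
            "frequencyPenalty": 0,
            "presencePenalty": 0,
            "temperature": 1,
            "topP": 1.0,
            "topK": 1,
        },
    }


body = json.dumps(generic_chat_request("ocid1.compartment.yourOCID", "who are you"), indent=2)
print(body)
```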

Response:

```json
{
  "modelId" : "meta.llama-3.3-70b-instruct",
  "modelVersion" : "1.0.0",
  "chatResponse" : {
    "apiFormat" : "GENERIC",
    "timeCreated" : "2025-04-09T09:39:40.145Z",
    "choices" : [ {
      "index" : 0,
      "message" : {
        "role" : "ASSISTANT",
        "content" : [ {
          "type" : "TEXT",
          "text" : "Niall from Dublin introduced himself."
        } ],
        "toolCalls" : [ "1" ]
      },
      "finishReason" : "stop",
      "logprobs" : {
        "1" : "1"
      }
    } ],
    "usage" : {
      "completionTokens" : 9,
      "promptTokens" : 52,
      "totalTokens" : 61
    }
  }
}
```
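Unlike the COHERE format, the GENERIC response nests the answer under `choices[].message.content[]` as a list of typed parts. It also reports token counts in `usage`. Below is a minimal Python sketch of reading both from a trimmed copy of the response above:

- the function names are hypothetical;
- joining all `TEXT` parts of a choice is my own choice.

```python
import json

# Trimmed copy of the Llama (GENERIC) response from above.
LLAMA_SAMPLE = json.loads("""
{
  "modelId": "meta.llama-3.3-70b-instruct",
  "chatResponse": {
    "apiFormat": "GENERIC",
    "choices": [
      {
        "index": 0,
        "message": {
          "role": "ASSISTANT",
          "content": [{"type": "TEXT", "text": "Niall from Dublin introduced himself."}]
        },
        "finishReason": "stop"
      }
    ],
    "usage": {"completionTokens": 9, "promptTokens": 52, "totalTokens": 61}
  }
}
""")


def generic_reply(response: dict, index: int = 0) -> str:
    """Concatenate the TEXT parts of one choice in a GENERIC chat response."""
    choice = response["chatResponse"]["choices"][index]
    parts = choice["message"]["content"]
    return "".join(p["text"] for p in parts if p.get("type") == "TEXT")


def total_tokens(response: dict) -> int:
    """Return the total token count from the usage block (0 if absent)."""
    return response["chatResponse"].get("usage", {}).get("totalTokens", 0)


print(generic_reply(LLAMA_SAMPLE), total_tokens(LLAMA_SAMPLE))
```

Tracking `totalTokens` per call is one simple way to keep an eye on on-demand usage from within an integration.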